The advent of artificial intelligence (AI) has brought about a revolutionary change in technology, with potential advantages and risks that span various sectors. The European Union has recognized these challenges and has taken a bold step by enacting the AI Act, which entered into force on August 1, 2024. This landmark legislation is designed to regulate the use, development, and deployment of AI systems within the EU. However, a pertinent question arises: How does this regulatory framework affect the software developers and programmers who create these AI systems?

The AI Act seeks to ensure that AI systems do not result in discriminatory, harmful, or unjust outcomes, particularly in sensitive areas like employment, healthcare, and education. Developed under the guidance of experts in computer science and law, the legislation categorizes AI applications into different risk tiers. High-risk AI systems, such as those used for screening job applications or determining credit scores, are subject to stringent requirements. These requirements demand transparency, accountability, and fairness in AI operations, with particular emphasis on robust training data and systematic documentation.

According to Holger Hermanns, a computer science professor at Saarland University, the regulation’s primary objective is to address the potential dangers posed by AI, especially in its interactions with vulnerable populations. The crux of the legislation lies in its ability to safeguard citizens while fostering innovation—a delicate balance that poses challenges and opportunities for the tech industry.

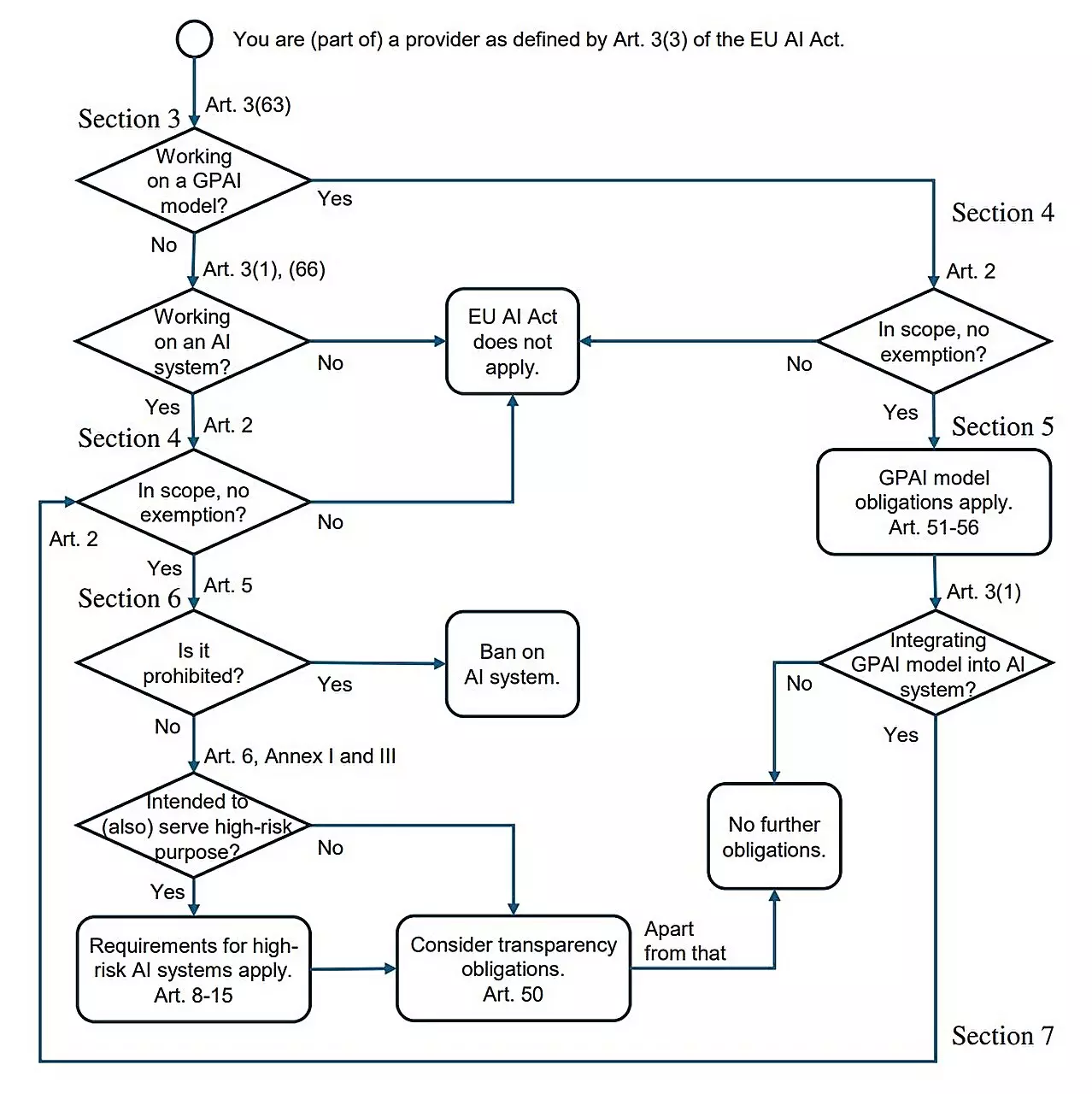

For programmers tasked with developing AI solutions, the implications of the AI Act can be daunting. Many in the field may find it difficult to interpret the intricacies of the 144-page document, prompting questions about the practical ramifications of compliance. Hermanns and a research team from Saarland University and the Dresden University of Technology have endeavored to provide clarity through their research paper titled “AI Act for the Working Programmer.” Their analysis attempts to demystify the regulations, especially concerning what developers actually need to know.

One of the study’s key takeaways is that the everyday work of most software developers will not drastically change; the regulations are predominantly focused on high-risk systems. Thus, AI applications that do not engage in sensitive decision-making processes will largely remain unaffected. For example, a program designed to filter job applications will have to comply with the Act’s requirements, while an AI developed for gaming or entertainment faces no comparable obligations and can be built much as before.

The requirements for high-risk AI systems are rigorous. Developers will need to ensure their training data is representative and free from biases that could lead to discriminatory practices. They must also maintain detailed logs and documentation of how the system operates, a concept akin to the “black box” in aviation, which records flight data so that events can be reconstructed afterwards.
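As a rough illustration of the representativeness requirement, the Python sketch below compares each group's share of a training set against a reference distribution and flags groups that fall short. The function names, the `age_band` attribute, the toy records, the reference shares, and the 0.15 tolerance are all hypothetical. The AI Act does not prescribe a specific metric or threshold, so this is one possible starting point rather than a compliance test.

```python
from collections import Counter


def group_shares(records, attribute):
    """Return each group's share of the dataset for one attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, reference, tolerance):
    """List groups whose dataset share is below the reference share
    by more than `tolerance` (an assumed, illustrative threshold)."""
    return sorted(
        group
        for group, expected in reference.items()
        if expected - shares.get(group, 0.0) > tolerance
    )


# Hypothetical toy training set: 6 + 3 + 1 applicant records.
applicants = (
    [{"age_band": "under_30"}] * 6
    + [{"age_band": "30_to_50"}] * 3
    + [{"age_band": "over_50"}] * 1
)

# Hypothetical reference distribution, e.g. the expected applicant population.
reference = {"under_30": 0.3, "30_to_50": 0.4, "over_50": 0.3}

shares = group_shares(applicants, "age_band")
flagged = underrepresented(shares, reference, tolerance=0.15)
print(flagged)  # ['over_50']
```

A check like this only covers one attribute at a time and says nothing about label quality or proxy variables, so it would complement, not replace, a fuller data review.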

The AI Act mandates that providers must adequately inform users about their systems, allowing for continuous supervision and error detection. Such provisions illustrate a significant shift toward a more responsible approach to AI, where developers are not only creators but also custodians of ethical standards.
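To make the "black box" idea concrete, here is a minimal Python sketch of an append-only decision log that a supervisor could later replay when looking for errors. The record fields, the `log_decision` and `read_log` helpers, and the `screening-0.1` version label are assumptions for illustration. The Act does not specify a log format, and a real system would also need to consider data protection and retention rules.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(stream, model_version, inputs, output):
    """Append one decision as a JSON line and return the record."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def read_log(stream):
    """Parse every non-empty JSON line back into a dictionary."""
    return [json.loads(line) for line in stream if line.strip()]


# An in-memory buffer stands in for a log file in this example.
buffer = io.StringIO()
log_decision(
    buffer,
    model_version="screening-0.1",  # hypothetical version label
    inputs={"years_experience": 2},  # hypothetical input feature
    output="reject",
)

buffer.seek(0)
records = read_log(buffer)
print(len(records), records[0]["output"])
```

Writing one JSON object per line keeps the log easy to append to and easy to inspect with ordinary text tools, which is why it is used here.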

Despite these demands, the AI Act does not impose restrictions on research and innovation. This essential provision allows for the continued development of both public and private AI projects, ensuring that Europe does not lag behind international trends in technological advancement. This fosters a landscape conducive to ethical AI while encouraging developers to embrace creativity and exploration.

The AI Act marks a crucial step in regulating artificial intelligence, and its adoption may serve as a model for future legislation worldwide. While the constraints introduced by the Act may seem formidable, experts believe they will mainly affect systems that require rigorous oversight. For everyday applications, developers can continue their work without significant disruption.

The overall sentiment surrounding the AI Act is positive. It represents a foundational piece of legislation that provides a comprehensive framework for AI usage across Europe. By bringing together legal and technological perspectives, the Act aims to balance the dual imperatives of innovation and accountability in the burgeoning field of artificial intelligence.

While the AI Act may pose additional obligations for some developers, its long-term benefits could strengthen trust in AI systems. As AI continues to evolve, the legislation may inspire similar initiatives worldwide, setting a precedent for responsible AI governance tailored to protect users and guide developers in a rapidly changing landscape.
