Artificial Intelligence (AI) continues to revolutionize how we process data, relying on algorithms to glean insights from extensive datasets. However, as these datasets grow in size and complexity, traditional data-processing methods run into a significant limitation known as the von Neumann bottleneck. This constraint stems from the architecture of classical computing systems and causes inefficiencies in data handling and computational speed. Researchers led by Professor Sun Zhong of Peking University have made strides toward addressing this problem with a dual-in-memory computing (dual-IMC) scheme. Their findings, published in the journal Device, point to a way of improving both the performance and the energy efficiency of AI systems.

To understand the significance of the dual-IMC approach, it helps to first understand the von Neumann bottleneck itself. In traditional computing architectures, memory and the processing unit are separate, so data must travel back and forth between memory (where it is stored) and the processor (where it is computed). This transfer introduces delays, and its cost becomes increasingly evident as the volume of generated data grows exponentially. Neural networks, which are foundational to AI, rely heavily on matrix-vector multiplication (MVM), which repeatedly reads large weight matrices and input vectors. In a von Neumann system, this constant data movement reduces performance and increases energy consumption, making it hard to keep pace with the demands of modern applications.
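To make the data-movement cost concrete, the following Python sketch counts memory transfers for y = Wx on a toy von Neumann machine. The `Memory` class and `von_neumann_mvm` function are hypothetical illustrations, and the model is deliberately naive: it has no caches, so every multiply-accumulate reads one weight and one input across the memory-processor boundary, giving 2·m·n transfers for an m×n matrix. Real processors use caches to reduce this traffic, but for large models whose weights do not fit on chip, the weights typically still have to be moved each time the matrix is used.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """Hypothetical toy memory unit that counts every word read by the processor."""
    data: dict
    reads: int = 0

    def load(self, key):
        # Each load models one transfer across the memory-processor bus.
        self.reads += 1
        return self.data[key]


def von_neumann_mvm(memory, rows, cols):
    """Compute y = W x, fetching each operand from memory before using it (no caching)."""
    y = []
    for i in range(rows):
        acc = 0.0
        for j in range(cols):
            w = memory.load(("W", i, j))  # weight fetched from memory
            x_j = memory.load(("x", j))   # input fetched again for every row
            acc += w * x_j                # multiply-accumulate happens in the processor
        y.append(acc)
    return y


W = [[1.0, 2.0, 0.5],
     [0.0, -1.0, 3.0]]
x = [2.0, 1.0, 4.0]

# Lay out W and x in the separate memory unit.
mem = Memory({**{("W", i, j): W[i][j] for i in range(2) for j in range(3)},
              **{("x", j): x[j] for j in range(3)}})
y = von_neumann_mvm(mem, rows=2, cols=3)
print(y)          # [6.0, 11.0]
print(mem.reads)  # 12 transfers for just 6 multiply-accumulates
```

Even for this 2×3 example, the processor performs two memory transfers for every multiply-accumulate, which is the traffic that in-memory computing aims to avoid.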

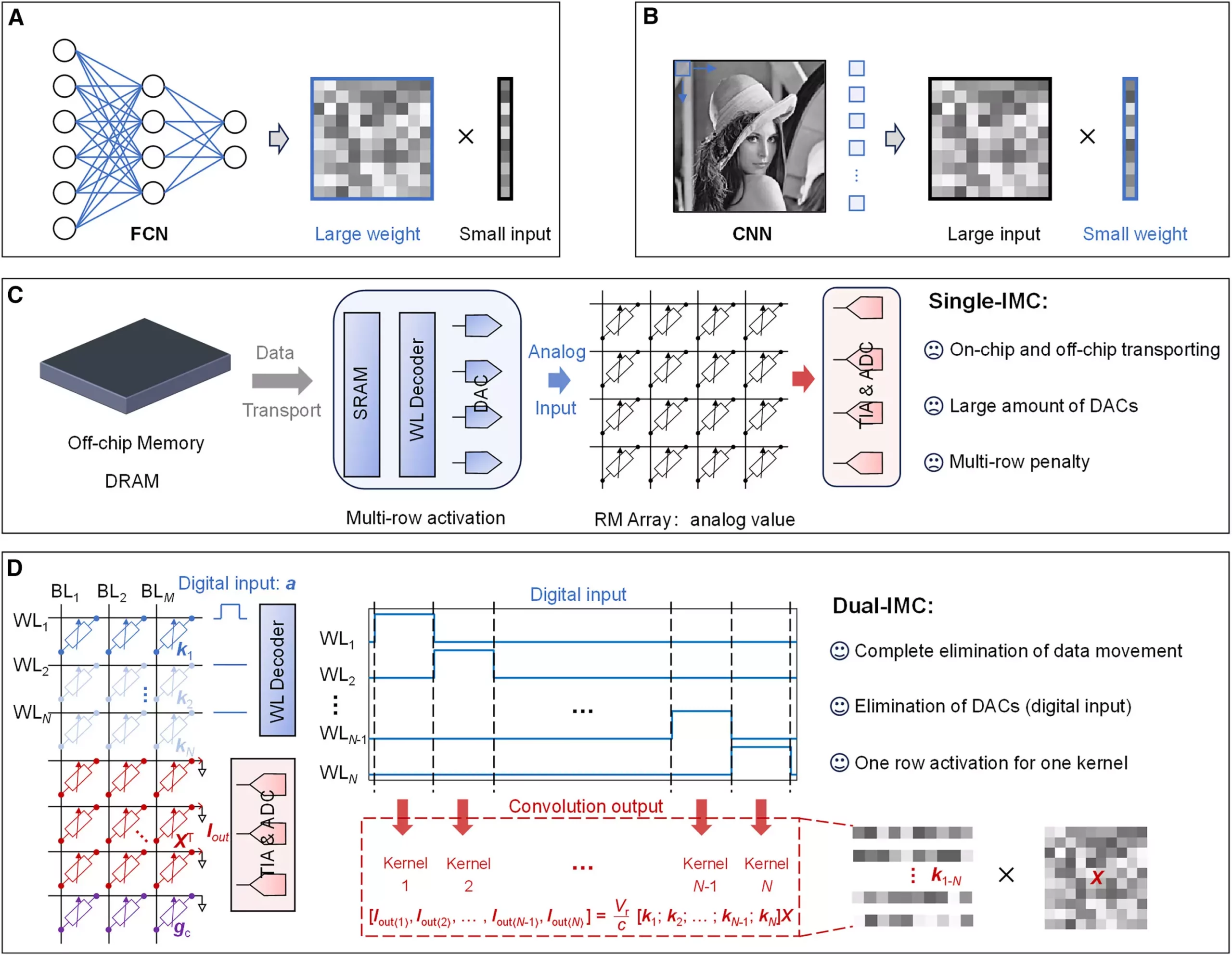

The primary breakthrough of dual-IMC lies in how it executes neural network operations. The conventional single-in-memory computing (single-IMC) method stores only the weights of a neural network in memory and must feed the inputs in from outside. Dual-IMC instead stores both the weights and the inputs in memory. Because both operands reside in the same in-memory environment, the computation takes place internally, minimizing off-chip data transfers.
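The difference between the two schemes can be sketched as a bookkeeping model in Python. The function `imc_mvm` and its counters are hypothetical and deliberately idealized: the multiply-accumulate result is computed the same way in both cases, and only the path the input vector takes differs. In the single-IMC case each input element is fetched from external memory and converted by a DAC; in the dual-IMC case the inputs already sit in memory. The sketch does not model the RRAM circuitry the researchers actually built.

```python
def imc_mvm(W, x, inputs_in_memory):
    """Idealized in-memory MVM that counts how the input vector reaches the array.

    Returns (y, external_reads, dac_conversions). The counters are hypothetical
    bookkeeping, not measurements of any real chip.
    """
    external_reads = 0
    dac_conversions = 0
    if inputs_in_memory:
        # Dual-IMC (idealized): the input vector is already stored in memory,
        # so nothing is fetched from outside and no input DAC is needed.
        operands = list(x)
    else:
        # Single-IMC: each input element is read from external DRAM/SRAM
        # and converted to an analog signal by a DAC before it can be applied.
        operands = []
        for value in x:
            external_reads += 1
            dac_conversions += 1
            operands.append(value)
    # In both schemes the weights are resident, so the multiply-accumulate
    # itself is performed inside the memory array.
    y = [sum(w * v for w, v in zip(row, operands)) for row in W]
    return y, external_reads, dac_conversions


W = [[1.0, 2.0, 0.5],
     [0.0, -1.0, 3.0]]
x = [2.0, 1.0, 4.0]

single = imc_mvm(W, x, inputs_in_memory=False)
dual = imc_mvm(W, x, inputs_in_memory=True)
print(single)  # ([6.0, 11.0], 3, 3)
print(dual)    # ([6.0, 11.0], 0, 0)
```

Both calls produce the same result; the single-IMC call reports one external read and one DAC conversion per input element, while the idealized dual-IMC call reports none.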

The research team tested the dual-IMC scheme using resistive random-access memory (RRAM) devices and reported promising results in tasks such as signal recovery and image processing. Because the entire operation is carried out in memory, dual-IMC offers benefits in several key areas.
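How an RRAM array can perform MVM in place is usually explained with the standard crossbar principle: weights are stored as device conductances, each device passes a current proportional to the voltage across it (Ohm's law), and the currents on a shared output line add up (Kirchhoff's current law). The Python sketch below shows the conventional case in which inputs are applied as voltages, as in single-IMC; it does not reproduce the researchers' dual-IMC circuit. The 100 µS maximum conductance and the 0.1 V-per-unit input scaling are hypothetical values, and only non-negative weights and inputs are handled for brevity.

```python
def program_conductances(W, g_max=100e-6):
    """Linearly map non-negative weights onto conductances in [0, g_max] siemens.

    g_max = 100 uS is a hypothetical device limit chosen for illustration.
    """
    w_max = max(max(row) for row in W)
    scale = g_max / w_max  # siemens per weight unit
    G = [[w * scale for w in row] for row in W]
    return G, scale


def crossbar_mvm(G, voltages):
    """Output line i collects I_i = sum_j G[i][j] * V_j.

    Each device contributes a current G * V (Ohm's law), and the currents
    flowing into a shared output line sum (Kirchhoff's current law).
    """
    return [sum(g * v for g, v in zip(row, voltages)) for row in G]


W = [[1.0, 2.0, 0.5],
     [0.0, 1.0, 3.0]]
x = [2.0, 1.0, 4.0]

G, g_scale = program_conductances(W)      # weights stored as conductances
v_scale = 0.1                             # hypothetical 0.1 V per input unit
V = [xi * v_scale for xi in x]            # inputs applied as read voltages
I = crossbar_mvm(G, V)                    # output currents in amperes
y = [i / (g_scale * v_scale) for i in I]  # rescale currents back to MVM outputs
print(y)  # approximately [6.0, 13.0], up to floating-point rounding
```

In this conventional arrangement the inputs still have to be turned into voltages on every operation, which is the step that storing the inputs in memory as well is meant to remove.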

The advantages of a dual-IMC system are multifaceted. First, because calculations are executed directly in memory, the system depends far less on external dynamic random-access memory (DRAM) and static random-access memory (SRAM) for data retrieval. Cutting out this shuttling of data between separate storage units saves energy and speeds up processing.

Additionally, the dual-IMC system avoids the drawbacks of the digital-to-analog converters (DACs) that conventional in-memory computing designs rely on to convert digital inputs into analog voltages. These converters occupy valuable chip area and add to power consumption and latency. By removing the need for DACs, the dual-IMC approach can lower production costs and enable more efficient chip designs, making in-memory computing more practical.

As industries from finance to healthcare increasingly rely on sophisticated AI models, efficient data processing has become essential. The dual-IMC research signals a shift in computing architecture: it addresses the limitations imposed by the von Neumann bottleneck and could pave the way for future advances in AI capabilities.

The dual-IMC scheme marks a significant step toward more efficient data processing in AI systems. By performing computation entirely in memory, the researchers open the door to computing systems that could gain substantially in efficiency, speed, and performance. As the digital landscape continues to evolve, innovations like dual-IMC are likely to be vital in meeting tomorrow’s technological challenges.