In a study published in Nature titled “Loss of Plasticity in Deep Continual Learning,” researchers from the Alberta Machine Intelligence Institute (Amii) have shed light on a critical issue in machine learning: deep learning systems gradually lose their ability to learn when trained continually. The finding has significant implications for the development of artificial intelligence that can adapt and keep learning in real-world scenarios. The researchers, led by A. Rupam Mahmood and Richard S. Sutton, describe it as a fundamental problem that affects deep learning models and hinders their ability to perform effectively over time.

The crux of the issue lies in the phenomenon of “loss of plasticity,” where deep learning agents engaged in continual learning gradually lose the ability to acquire new knowledge and experience a significant decline in performance. Not only do these AI agents struggle to learn new information, but they also fail to relearn previously acquired knowledge after it has been forgotten. This poses a major challenge in developing artificial intelligence systems that can handle the complexity of the real world and achieve human-level cognitive abilities.

One of the key limitations highlighted by the researchers is the lack of support for continual learning in many existing deep learning models. For instance, models like ChatGPT are typically trained for a fixed duration and then deployed without further learning. Integrating new information into these models’ memory can be challenging, often requiring a complete retraining from scratch. This approach not only consumes significant time and resources but also restricts the scope of tasks that the models can effectively perform.

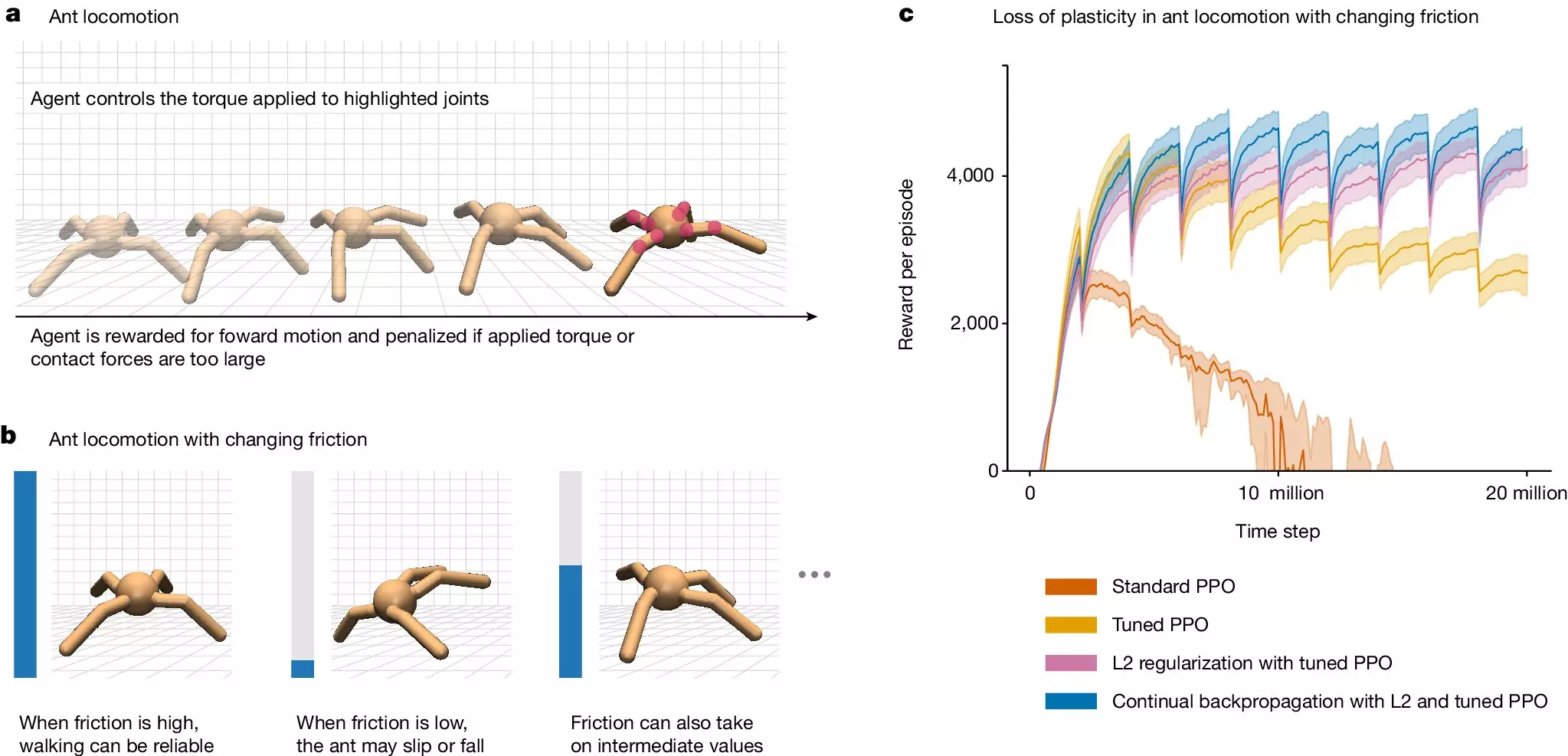

To investigate the pervasiveness of loss of plasticity in deep learning systems, the research team conducted a series of experiments involving supervised learning tasks. They observed a gradual decline in the network’s ability to differentiate between various classes as it continued to learn new tasks, indicating a loss of plasticity over time. By documenting these findings, the researchers aimed to raise awareness about the critical nature of this issue in the deep learning community.

In their quest to address loss of plasticity, the researchers devised a novel method known as “continual backpropagation.” This approach involves periodically reinitializing ineffective units within the neural network with random weights during the learning process. By implementing continual backpropagation, the researchers found that models could sustain continual learning for extended periods, potentially indefinitely. This innovative solution offers a promising avenue for mitigating the impact of loss of plasticity in deep learning networks.
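The reinitialization step described above can be illustrated with a minimal sketch for a network with one hidden layer. This is not the researchers' implementation: the per-unit `utility` score, the `replace_fraction` and `maturity` parameters, and the function name are hypothetical choices made for illustration, and zeroing a reset unit's outgoing weights is a design choice of this sketch so that the fresh unit does not disturb the network's current output.

```python
import random


def reinit_low_utility_units(w_in, w_out, utility, age, rng,
                             replace_fraction=0.1, maturity=100,
                             init_scale=0.1):
    """Reset the least useful mature hidden units in place.

    w_in[i][j]  : weight from input i to hidden unit j
    w_out[j][k] : weight from hidden unit j to output k
    utility[j]  : running usefulness score of unit j (hypothetical measure)
    age[j]      : steps since unit j was last (re)initialized
    Returns the indices of the units that were reset.
    """
    # Only units that have had time to learn are candidates for reset.
    mature = [j for j, a in enumerate(age) if a >= maturity]
    n_replace = int(replace_fraction * len(mature))

    # Pick the mature units with the lowest utility.
    worst = sorted(mature, key=lambda j: utility[j])[:n_replace]

    for j in worst:
        # Fresh random incoming weights restore the unit's ability to learn.
        for row in w_in:
            row[j] = rng.gauss(0.0, init_scale)
        # Zero outgoing weights (assumption of this sketch) so the reset
        # unit starts with no effect on the output.
        w_out[j] = [0.0] * len(w_out[j])
        utility[j] = 0.0
        age[j] = 0
    return worst
```

In a training loop, a function like this would be called every so often between ordinary backpropagation updates, with `utility` and `age` updated on every step. Protecting young units through `maturity` keeps freshly reset units from being selected again before they have had a chance to become useful.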

While the research team acknowledges that further refinements and alternative solutions may be developed to combat loss of plasticity, the success of continual backpropagation underscores the possibility of overcoming this inherent challenge in deep learning models. By drawing attention to this critical issue, the researchers hope to spark greater interest and exploration within the scientific community to address the fundamental issues plaguing deep learning. Through collaborative efforts and innovative approaches, the field of artificial intelligence can progress towards achieving more robust and adaptive AI systems.
