The advancement of artificial intelligence, particularly in natural language processing, has opened avenues previously thought unattainable. As these systems evolve, the need for precise and reliable responses becomes critical. Traditional language models often stumble on complex prompts, producing inaccuracies that can have real consequences, especially in fields like medicine or mathematics. Researchers at MIT have developed a method called Co-LLM that improves the accuracy of large language models (LLMs) by getting them to collaborate, offering a fresh perspective on how models can interact.

Imagine you’re asked to explain a complex topic but have only a fragment of the knowledge required. Rather than risk spreading misinformation, the sensible move is to consult someone who knows the subject better. LLMs can benefit from the same kind of collaboration, but a significant challenge remains: teaching a model when to seek help from a more specialized counterpart. Existing approaches often rely on elaborate formulas or large labeled datasets to spell out when models should work together, which makes them cumbersome and inefficient.

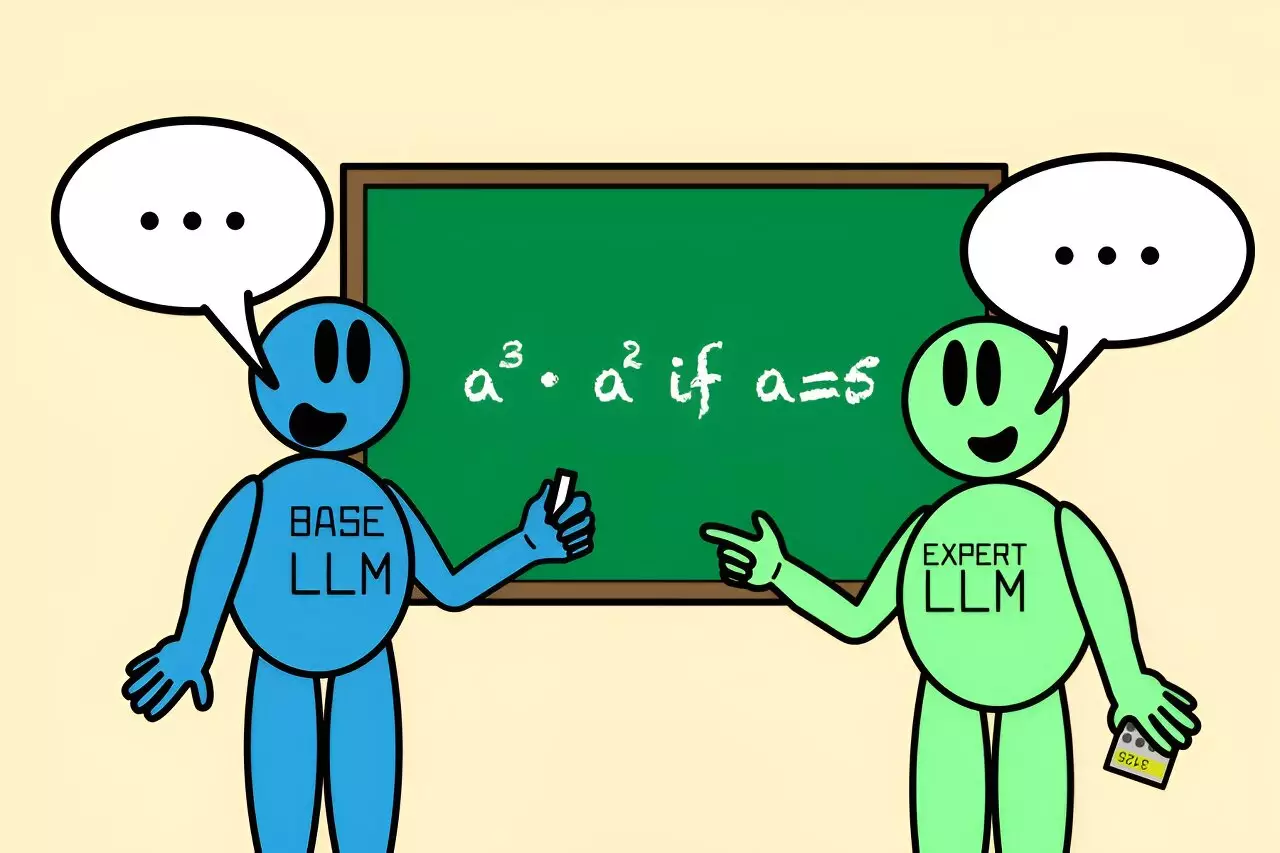

MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has proposed Co-LLM, an algorithm designed to streamline this collaboration. It pairs a general-purpose model with a more specialized one: the general model generates a response while continually evaluating its own output to identify the points that call for more expertise.

At the heart of Co-LLM is a “switch variable.” Acting like a project manager, the switch assesses, in real time, each word (token) the general model is about to produce. When a word or phrase requires precision the general model lacks, the switch hands that part of the response to the expert model. Users therefore get more accurate information, whether the task is answering a complex medical question or solving an intricate math problem.
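The token-by-token hand-off can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: `base`, `expert`, and `switch` are hypothetical stand-in functions, decoding is greedy, the switch's output is treated as the probability of deferring to the expert, and the 0.5 `threshold` is an arbitrary choice. The toy "models" simply read from fixed scripts for the arithmetic question 5^3 · 5^2.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins for real LLMs: each maps the tokens so far to the
# next token (greedy decoding). A real model would return a distribution.
NextToken = Callable[[List[str]], str]
# Hypothetical switch: maps the tokens so far to P(defer to the expert).
Switch = Callable[[List[str]], float]


def co_decode(prompt: List[str], base: NextToken, expert: NextToken,
              switch: Switch, threshold: float = 0.5,
              max_tokens: int = 20, eos: str = "<eos>"
              ) -> Tuple[List[str], List[str]]:
    """Generate one token at a time, letting the switch pick which model
    produces each token. Returns the tokens and the source of each."""
    context = list(prompt)
    output: List[str] = []
    sources: List[str] = []
    for _ in range(max_tokens):
        if switch(context) > threshold:
            token, source = expert(context), "expert"
        else:
            token, source = base(context), "base"
        if token == eos:
            break
        context.append(token)
        output.append(token)
        sources.append(source)
    return output, sources


# Toy example: scripted "models" that read their answer off a fixed script.
PROMPT = ["Compute", "a^3", "*", "a^2", "for", "a=5", ":"]
BASE_SCRIPT = ["The", "answer", "is", "125", "<eos>"]     # wrong answer
EXPERT_SCRIPT = ["The", "answer", "is", "3125", "<eos>"]  # 5**5 == 3125


def scripted(script: List[str]) -> NextToken:
    return lambda ctx: script[len(ctx) - len(PROMPT)]


def toy_switch(ctx: List[str]) -> float:
    # Hand-written rule: defer only when the next token is the numeric result.
    return 0.9 if ctx[-1] == "is" else 0.1


tokens, who = co_decode(PROMPT, scripted(BASE_SCRIPT),
                        scripted(EXPERT_SCRIPT), toy_switch)
print(list(zip(tokens, who)))
```

Here the toy switch defers only at the numeric token, so three tokens come from the base model and one from the expert. A real switch would be learned from the base model's internal state rather than written by hand.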

For instance, asked to identify the ingredients of a prescription drug, a general-purpose model on its own might produce an incomplete or erroneous answer. Paired with an expert model that specializes in medical data, Co-LLM calls on the expert only where precision is necessary, yielding a markedly better answer. This adaptability shows how Co-LLM can turn a relatively simple model into one that draws on specialized knowledge exactly when it needs it.

The team behind Co-LLM stresses that the collaboration is learned organically. Co-LLM trains the base model on domain-specific data, teaching it to recognize its own limitations and to defer to the specialized model at those points. This mirrors how people learn to collaborate: by recognizing when they lack knowledge and seeking assistance.
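One way to learn such a switch without hand-labeled "defer here" annotations is to treat the choice of model at each token as a hidden (latent) variable and maximize the marginal likelihood of the reference text. The switch then learns to favor the expert wherever the expert assigns the correct token a higher probability. This formulation is assumed here for illustration and heavily simplified; it is not presented as the researchers' exact training procedure. The switch is a logistic function of a single hypothetical feature `h` (standing in for the base model's internal state), and the per-token probabilities are made-up numbers, not outputs of real models.

```python
import math
from typing import List, Tuple

# One training token: (h, p_base, p_expert), where h is a hypothetical scalar
# feature of the base model's state, and p_base / p_expert are the
# probabilities each model assigns to the correct next token.
Token = Tuple[float, float, float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def marginal_log_likelihood(data: List[Token], w: float, b: float) -> float:
    # log P(token) = log[(1 - s) * p_base + s * p_expert], with s = P(defer).
    total = 0.0
    for h, p_base, p_exp in data:
        s = sigmoid(w * h + b)
        total += math.log((1.0 - s) * p_base + s * p_exp)
    return total


def train_switch(data: List[Token], lr: float = 0.5,
                 steps: int = 200) -> Tuple[float, float]:
    """Gradient ascent on the marginal log-likelihood; no deferral labels."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for h, p_base, p_exp in data:
            s = sigmoid(w * h + b)
            mix = (1.0 - s) * p_base + s * p_exp
            # d/dz of log(mix), where z = w * h + b and ds/dz = s * (1 - s).
            g = (p_exp - p_base) * s * (1.0 - s) / mix
            grad_w += g * h
            grad_b += g
        w += lr * grad_w
        b += lr * grad_b
    return w, b


# Made-up data: "hard" tokens (h = 1) where the expert is far more likely to
# be right, and "easy" tokens (h = -1) where the base model does better.
DATA: List[Token] = [(1.0, 0.05, 0.90), (1.0, 0.10, 0.80),
                     (-1.0, 0.90, 0.30), (-1.0, 0.85, 0.40)]

w, b = train_switch(DATA)
print("P(defer | hard) =", round(sigmoid(w + b), 3))
print("P(defer | easy) =", round(sigmoid(-w + b), 3))
```

No token is ever labeled "defer." The switch leans toward the expert on hard tokens and toward the base model on easy ones only because doing so raises the likelihood of the reference text.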

Co-LLM is also not tied to a particular field. The algorithm has been successfully applied to areas as different as biomedical tasks and mathematical reasoning tests. Because it routes individual tokens to the appropriate expert model, it can improve accuracy precisely where conventional models falter.

Many current collaboration techniques require all participating models to run at the same time, which wastes resources. Co-LLM instead calls on the expert model only when the switch decides it is needed. Because this activation happens at the level of individual tokens, compute is spent on the expert only for the parts of a response that require it, making the overall process more efficient.
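The efficiency argument comes down to simple arithmetic. The sketch below compares a scheme in which both models run at every token with token-level deferral, in which the base model runs at every token and the expert runs only on deferred tokens. The per-token costs and the deferral count are hypothetical placeholders, and the cost model deliberately ignores overheads such as keeping the expert's context up to date, so it illustrates the shape of the saving rather than measuring it.

```python
def cost_all_models(n_tokens: int, c_base: float, c_expert: float) -> float:
    # Both models run at every token.
    return n_tokens * (c_base + c_expert)


def cost_token_deferral(n_tokens: int, n_deferred: int,
                        c_base: float, c_expert: float) -> float:
    # Assumed cost model: the base model runs at every token (it also feeds
    # the switch); the expert runs only on the tokens handed to it.
    if not 0 <= n_deferred <= n_tokens:
        raise ValueError("n_deferred must be between 0 and n_tokens")
    return n_tokens * c_base + n_deferred * c_expert


# Hypothetical numbers: a 200-token answer, 10% of tokens deferred, and an
# expert that costs 10x more per token than the base model.
both = cost_all_models(200, c_base=1.0, c_expert=10.0)
co = cost_token_deferral(200, 20, c_base=1.0, c_expert=10.0)
print(both, co, co / both)
```

Under these assumptions, token-level deferral costs 400 units versus 2,200 for running both models throughout, about 18% of the cost. The saving shrinks as the fraction of deferred tokens grows.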

Co-LLM’s design also leaves room for further improvement. The researchers are exploring ways to let the base model backtrack and re-engage the expert model when a response falls short of accuracy expectations. Such a capability could help in cases where even the specialized model gives an unsatisfactory answer, making the system more reliable.
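Because backtracking is still being explored, the following is only a hypothetical sketch of one way it could work, not the researchers' design. It assumes a checker function `verify` (hypothetical; in practice it might be a test, a rule, or another model). Whenever a response fails the check, it rewinds to the most recent token the base model produced, forces the expert at that position, and regenerates from there. The models and the switch are toy stand-ins, and the switch here never defers, mimicking an undertrained switch.

```python
from typing import Callable, List, Set, Tuple

NextToken = Callable[[List[str]], str]      # hypothetical model stand-in
Defer = Callable[[List[str]], bool]         # hypothetical switch decision
Verify = Callable[[List[str]], bool]        # hypothetical answer checker


def decode(prompt: List[str], base: NextToken, expert: NextToken,
           defer: Defer, forced: Set[int], max_tokens: int = 20,
           eos: str = "<eos>") -> Tuple[List[str], List[str]]:
    """Token-level routing; output positions in `forced` always use the expert."""
    context = list(prompt)
    out: List[str] = []
    src: List[str] = []
    for _ in range(max_tokens):
        use_expert = len(out) in forced or defer(context)
        token = expert(context) if use_expert else base(context)
        if token == eos:
            break
        context.append(token)
        out.append(token)
        src.append("expert" if use_expert else "base")
    return out, src


def decode_with_backtracking(prompt: List[str], base: NextToken,
                             expert: NextToken, defer: Defer, verify: Verify,
                             max_retries: int = 3
                             ) -> Tuple[List[str], List[str]]:
    forced: Set[int] = set()
    out, src = decode(prompt, base, expert, defer, forced)
    for _ in range(max_retries):
        if verify(out):
            break
        # Rewind to the most recent base-model token and force the expert there.
        base_positions = [i for i, s in enumerate(src) if s == "base"]
        if not base_positions:
            break  # every token already came from the expert
        forced.add(base_positions[-1])
        out, src = decode(prompt, base, expert, defer, forced)
    return out, src


# Toy scripted "models" for the question 5^3 * 5^2.
PROMPT = ["Compute", "5^3", "*", "5^2", ":"]
BASE_SCRIPT = ["The", "answer", "is", "125", "<eos>"]
EXPERT_SCRIPT = ["The", "answer", "is", "3125", "<eos>"]


def scripted(script: List[str]) -> NextToken:
    return lambda ctx: script[len(ctx) - len(PROMPT)]


def never_defer(ctx: List[str]) -> bool:
    return False


def ends_with_correct_answer(out: List[str]) -> bool:
    return out[-1:] == [str(5 ** 5)]  # 5^3 * 5^2 = 5^5 = 3125


tokens, who = decode_with_backtracking(PROMPT, scripted(BASE_SCRIPT),
                                       scripted(EXPERT_SCRIPT), never_defer,
                                       ends_with_correct_answer)
print(list(zip(tokens, who)))
```

Rewinding one token at a time is the simplest possible policy. A practical system would need a smarter way to decide how far to rewind and when to give up.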

As Co-LLM continues to develop, it could find a wide range of applications, from assisting with enterprise-level documentation to keeping small, specialized models relevant by continually training them on current data. The implications for businesses and industries could be substantial.

Colin Raffel, an expert in the field, praises Co-LLM’s granular flexibility and its distinctive approach to model routing. By letting models make decisions token by token rather than treating an entire response as a single monolithic unit, Co-LLM significantly improves on traditional AI collaboration strategies.
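The difference in granularity can be made concrete by routing the same per-token deferral scores two ways. In this hypothetical sketch, query-level routing picks one model for the whole response (here by whether the average score crosses a threshold, an assumed rule), while token-level routing decides separately at each position. The scores are made-up numbers.

```python
from typing import List


def query_level_route(scores: List[float], threshold: float = 0.5) -> List[str]:
    # One decision for the whole response: every token goes to the same model.
    if not scores:
        return []
    choice = "expert" if sum(scores) / len(scores) > threshold else "base"
    return [choice] * len(scores)


def token_level_route(scores: List[float], threshold: float = 0.5) -> List[str]:
    # A separate decision for every token.
    return ["expert" if s > threshold else "base" for s in scores]


# Made-up deferral scores for a 6-token answer with a single hard token.
SCORES = [0.1, 0.2, 0.1, 0.9, 0.1, 0.2]
print(query_level_route(SCORES))
print(token_level_route(SCORES))
```

Query-level routing sends the entire answer to the base model and misses the one hard token; lowering its threshold would instead send all six tokens to the expert. Token-level routing hands over only the fourth token.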

As artificial intelligence reshapes how knowledge is acquired and shared, innovations like Co-LLM are likely to play a crucial role. By mimicking human-like collaboration, the algorithm not only improves the accuracy of responses but also paves the way for more effective communication among AI systems.
