Artificial intelligence (AI) technology has advanced rapidly in recent years, with applications such as generative AI pushing the scalability of existing digital hardware to its limits. As a result, interest is growing in analog hardware specialized for AI computation. A recent study published in Science Advances shows how analog hardware built from Electrochemical Random Access Memory (ECRAM) devices can optimize computational performance in AI, a result that could pave the way for commercialization.

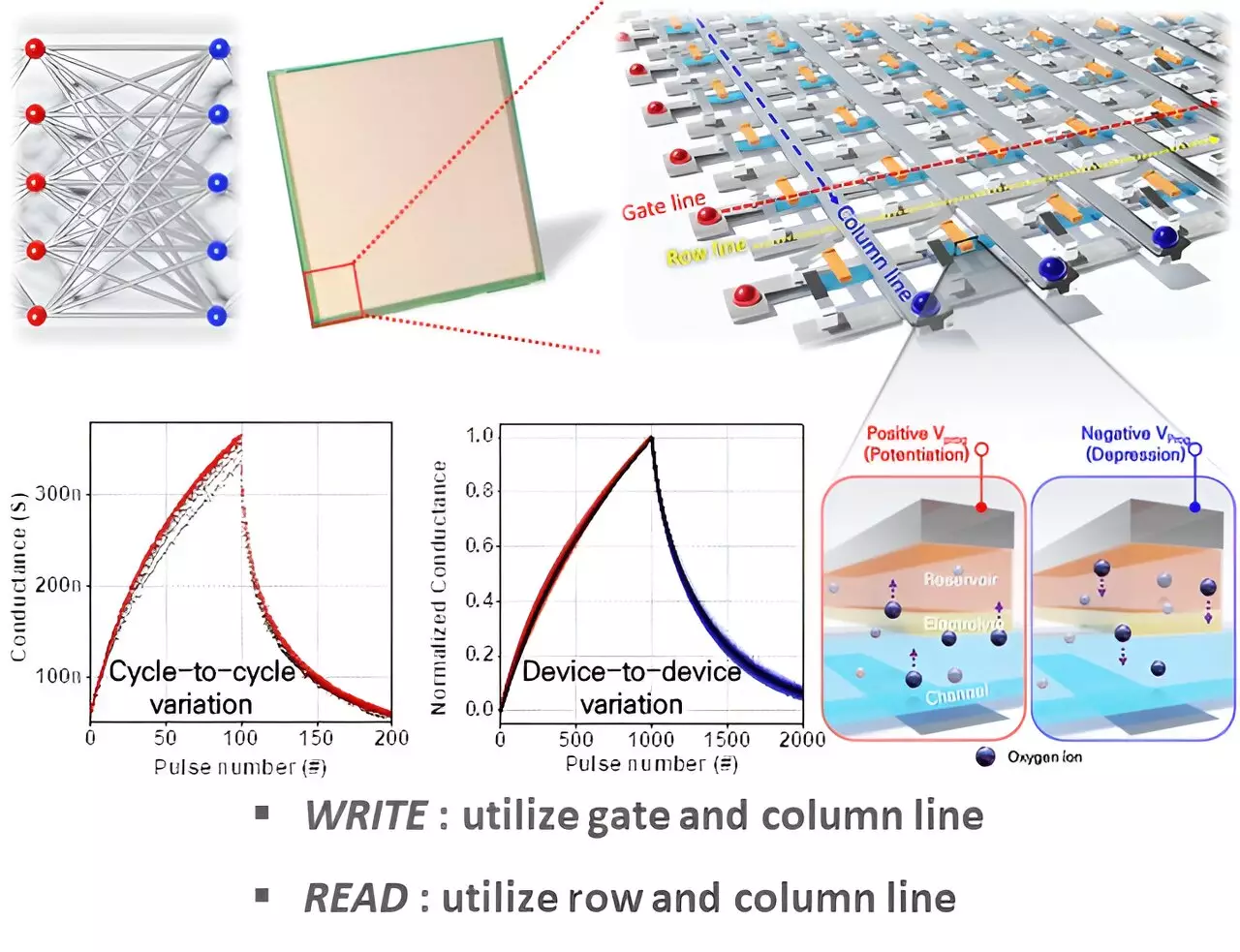

Digital hardware such as CPUs, GPUs, and ASICs has been the backbone of AI computation, but its scalability for tasks like continuous data processing and computational learning is limited. Analog hardware, on the other hand, offers advantages for specific computational tasks. It adjusts the resistance of semiconductor devices in response to external voltage or current, and it arranges memory devices in a cross-point array, with one device at each crossing of perpendicular lines, so that AI computation is processed in parallel. Despite these advantages, meeting the diverse requirements of AI computation remains a challenge.
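To make the parallelism concrete, here is a minimal sketch of an idealized cross-point array in plain Python. It is not taken from the study: it assumes perfectly linear devices with no wire resistance or noise, and the function name `crossbar_mvm` and the conductance and voltage values are hypothetical. Each device contributes a current equal to its conductance times the applied row voltage (Ohm's law), and the currents on each column line add up (Kirchhoff's current law), so the array produces a full matrix-vector product in a single read step.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized cross-point array.

    conductances[i][j] is the conductance (siemens) of the device where
    row line i crosses column line j; voltages[i] is the voltage (volts)
    applied to row line i. Both the name and the model are illustrative
    assumptions, not the method used in the study.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row line")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device; summing on the shared column line
            # models Kirchhoff's current law.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 2x2 array: conductances in siemens, inputs in volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
column_currents = crossbar_mvm(G, V)
print(column_currents)
```

In physical hardware the two nested loops do not exist: every device conducts at once, which is why the cost of the operation does not grow with the number of devices the way it does on a sequential digital processor.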

The research team, led by Professor Seyoung Kim, focused on ECRAM devices for their study. ECRAM devices control electrical conductivity through ion movement and concentration, and they have a three-terminal structure with separate paths for reading and writing data. This allows them to operate at relatively low power compared to traditional semiconductor memory devices. In their experiments, the team fabricated ECRAM devices in a 64×64 array that showed excellent electrical and switching characteristics, along with high yield and uniformity.

One notable aspect of the study was the application of the Tiki-Taka algorithm, an analog-based learning algorithm, to the high-yield ECRAM hardware, which maximized the accuracy of AI neural network training computations. The researchers also highlighted how the hardware's “weight retention” property affects learning, and demonstrated that their technique does not overload artificial neural networks. These findings have significant implications for commercializing the technology.

Scaling Up ECRAM Devices

An interesting aspect of the research is the scale at which the ECRAM devices were implemented. The largest array of ECRAM devices for storing and processing analog signals previously reported in the literature was 10×10 (100 devices). The researchers implemented a 64×64 array (4,096 devices), with varied characteristics for each device. This demonstrates the potential for ECRAM devices to be used at a larger scale for AI computation.

The research on analog hardware using ECRAM devices represents a significant step forward in optimizing AI computation. By addressing the limitations of digital hardware and demonstrating a path toward commercialization, the study lays groundwork for future advances in AI hardware. With further research and development, analog hardware may play a key role in accelerating artificial intelligence.
